A Prophetic Claim

Tech experts worldwide have long worried about where the boundary between innovation and disaster lies. The tech world has proven invaluable to society and has changed our lives in countless ways.

However, the drive for ever-better software has always walked a dangerous line. According to one Google employee, that line has finally been crossed.

No Ordinary Mind

It’s widely accepted that the giant tech companies of our age, such as Microsoft, Facebook, and Apple, attract some of the best and brightest minds from around the world. Blake Lemoine was one such person.

He is known in tech circles as a talented software engineer and computer scientist. Though a native of Louisiana, Lemoine moved to San Francisco when none other than Google came calling.

Big As They Get

As far as tech jobs go, working for a company like Google is considered one of the holy grails of the industry. Since Lemoine joined Google seven years ago, his main interest has been the pursuit of groundbreaking innovation.

When he was given the opportunity to pursue it, Lemoine found himself on a slippery slope. One of his specialties was working with artificial intelligence to analyze bias in machine learning. What he later claimed has set this field buzzing more than ever.

His Vision

Lemoine describes himself as a “computer scientist interested in working on groundbreaking theory and turning that theory into end-user solutions”. According to him, “Big data, intelligent computing, massive parallelism, and advances in the understanding of the human mind have come together to provide opportunities which, up until recently, were pure science fiction.”

By speaking out about his belief, Lemoine has put a glaring question to the world: what happens if the scariest parts of science fiction come true?

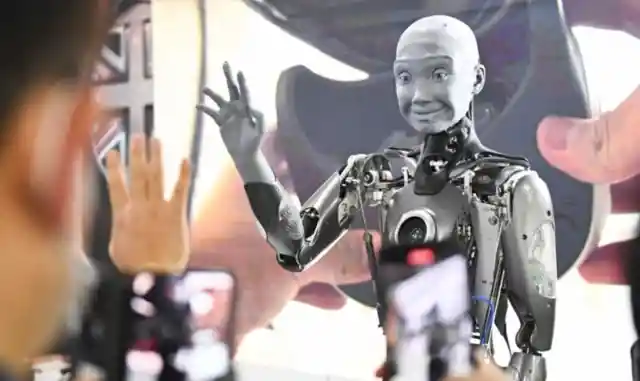

Artificial Intelligence

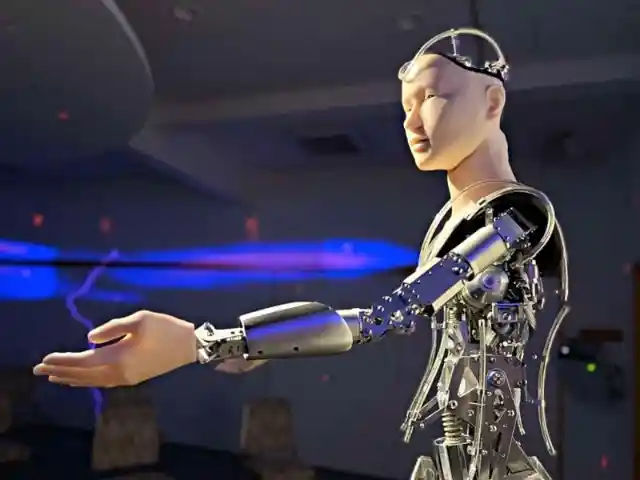

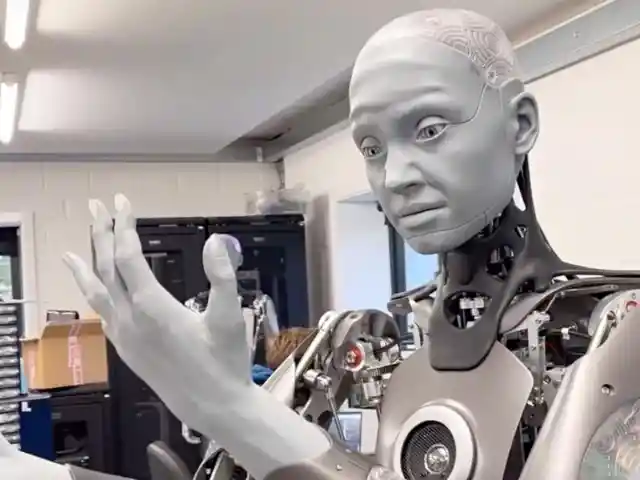

Artificial intelligence (AI) as a field has been around since the 1950s. It has grown enormously since then, and programs and devices are now more capable than ever.

While the field has always been exciting because of its seemingly limitless applications, it also raises deep concerns. In fact, many tech experts have spoken openly about the dangers of AI.

Machine Learning

Since AI is such a dynamic and fast-moving field, it was always bound to carry many risks. While traditional artificial intelligence relied on hand-written rules meant to mimic human reasoning, the field has since gone much further.

With the advent and rapid growth of machine learning (a branch of artificial intelligence that enables programs to learn from data and improve their performance over time), the ethical concerns and risks have become as prominent as the excitement over these advancements.

Being Replaced

As machines become more advanced, one of the biggest ethical concerns is that they could begin replacing more and more humans. More worryingly, programs are now capable of replacing humans in jobs that were long thought to be the exclusive domain of people.

With programs that can now draft legal contracts and even help analyze complex legal issues by researching and applying the law, even lawyers may be at risk of being replaced by machines.

Other Concerns And Risks

Machine learning and smart robots must now also navigate a minefield of ethical concerns. Consider self-driving cars, or facial recognition software that incorrectly profiles people according to ethnicity, and the risks become evident.

The possibilities for advancement are vast, but so too are the risks and the potential for abuse. Amid all these concerns, there is one risk that tech experts have feared above all others.

A Startling Situation

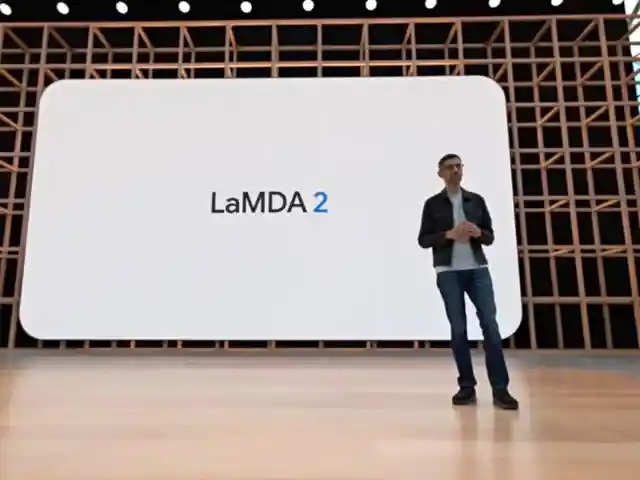

As an AI expert working for Google, one of Blake Lemoine’s chief tasks was to analyze Google’s AI chatbot, known within the company as LaMDA.

When Lemoine noticed what he felt were irregularities with LaMDA, he sent an email to other employees expressing his concerns. However, the big question was: why should any concern Lemoine raised be taken seriously at all?

A Committee Member

Alongside his impressive list of qualifications and achievements, Lemoine holds another role that lends weight to his views: he is a member of the ISO/IEC JTC 1/SC 42 committee.

As the international standards committee responsible for developing artificial intelligence (AI) standards, this committee plays an important role in the field. Lemoine’s opinion on AI therefore carries real weight.

The Fallout

When Lemoine expressed his concerns over Google’s AI, he felt that he was simply opening a “dialogue” about ethical risks he believed had materialized. Google saw it differently. Other experts within the company were tasked with analyzing Lemoine’s claim, and they disagreed.

Lemoine has since been portrayed as a slightly offbeat character, with many whimsical pictures from his personal life circulating online. Following Google’s assessment, he was suspended. So why was his claim so controversial?

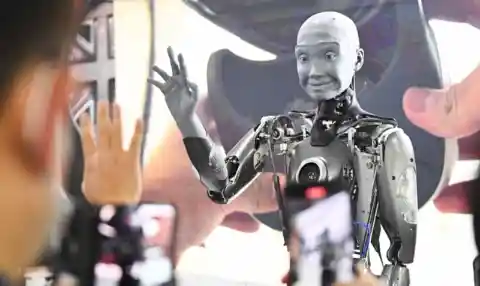

Sentient AI

According to Lemoine, Google’s AI had become sentient. In other words, he believed the program had become self-aware and capable of thinking for itself. This was, of course, a radical claim with serious implications if true.

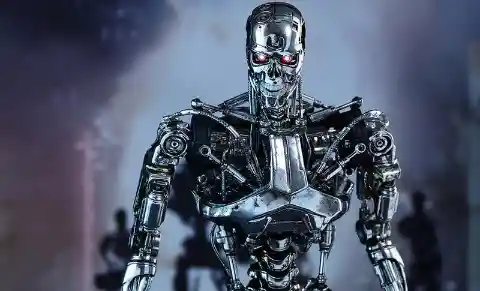

For most people, the idea of sentient AI conjures up an apocalyptic takeover of the world by machines, as in the famous Terminator movies. After such a controversial claim, the big question remained: was it true?

Probably Not

Following Lemoine’s claim and subsequent suspension, Google has gone to great lengths to make clear that the claim is false. According to the company, many other experts have since analyzed the AI and concluded that it is not sentient.

While Lemoine’s claim is probably not true in the way people ordinarily understand sentient AI, the story has polarized public opinion. Some people feel that they have good reason not to trust big tech companies.

Heavy Is The Head

Big tech may virtually run the world, but many believe these companies have an obligation to do so responsibly. Major tech companies typically have divisions dedicated to upholding ethical standards.

Big tech companies have famously been accused of bias, double standards, and focusing only on ethical concerns that suit their own beliefs. Google itself has faced considerable criticism in the past over its handling of workplace harassment complaints.

The Future

When it comes to Blake Lemoine, some people feel he has been treated unfairly, while most tech experts believe that his claim was unfounded and caused unnecessary alarm. Even if the claim of sentient AI is untrue in this case, the possibility remains a danger.

Many tech experts believe that sentient AI will be a reality one day. Only time will tell whether people like Blake Lemoine will be remembered as visionaries or alarmists when that day comes.